Based on the technical architecture of my digital home, I’m currently running 42 micro-services. Each micro-service plays a specific role in our daily lives. This article gives an overview of the use-cases that these micro-services enable.

I have multiple goals with these use-cases:

- Comfort: automating daily routines, especially those that are highly repetitive and involve many steps;

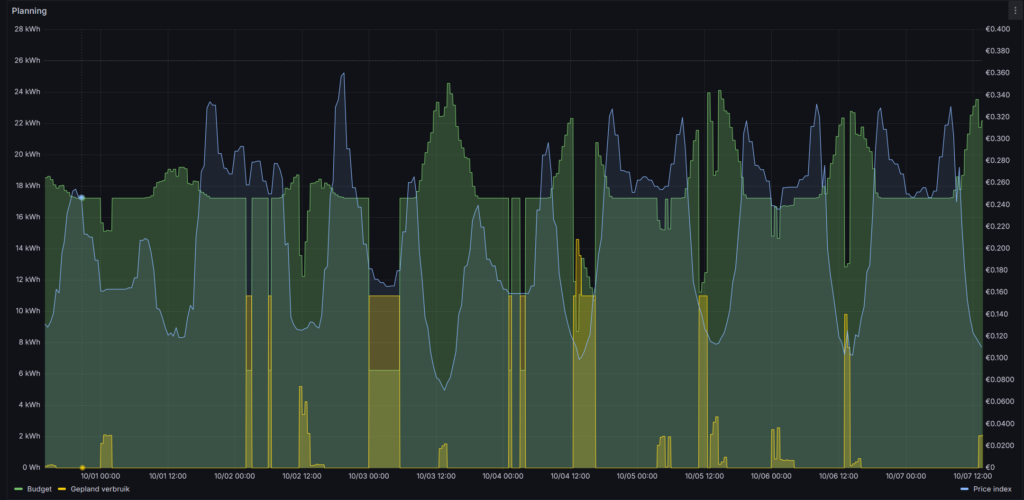

- Reducing energy cost: we live in a fully electric house (with the exception of the second car) and consume a lot of energy. Prices are dynamic, so by planning our consumption we can reduce our energy cost;

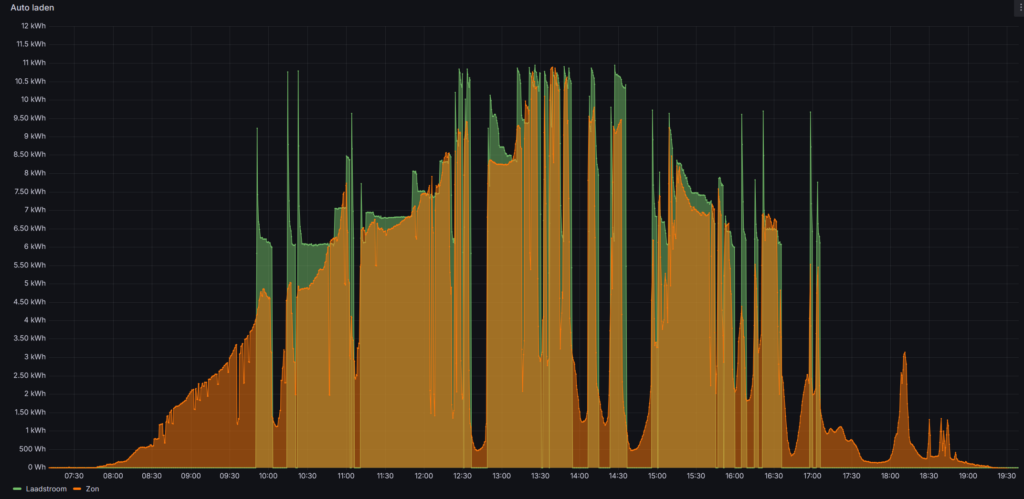

- Reducing grid load: since nearly everything in our home runs on electricity, we try to consume energy outside the grid congestion hours (between 16.00 and 21.00) and take the availability of solar energy into account.

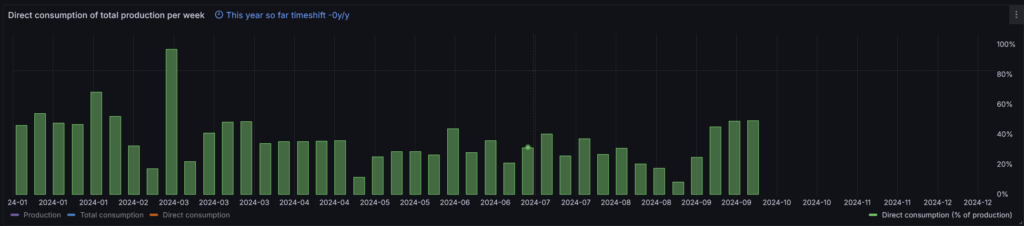

It's important to understand that I use a price index to select the best moment of the day to consume energy. I calculate the price index from the EPEX energy prices and the expected amount of solar energy.

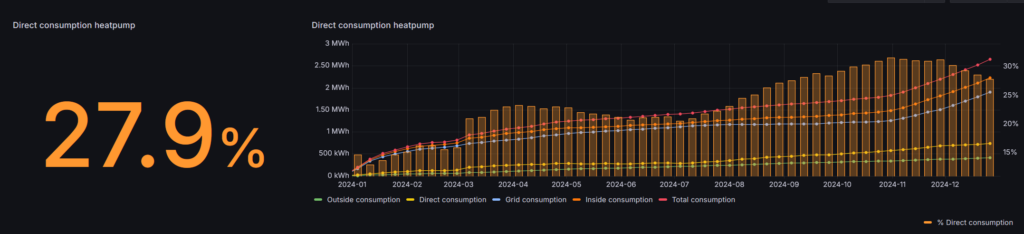

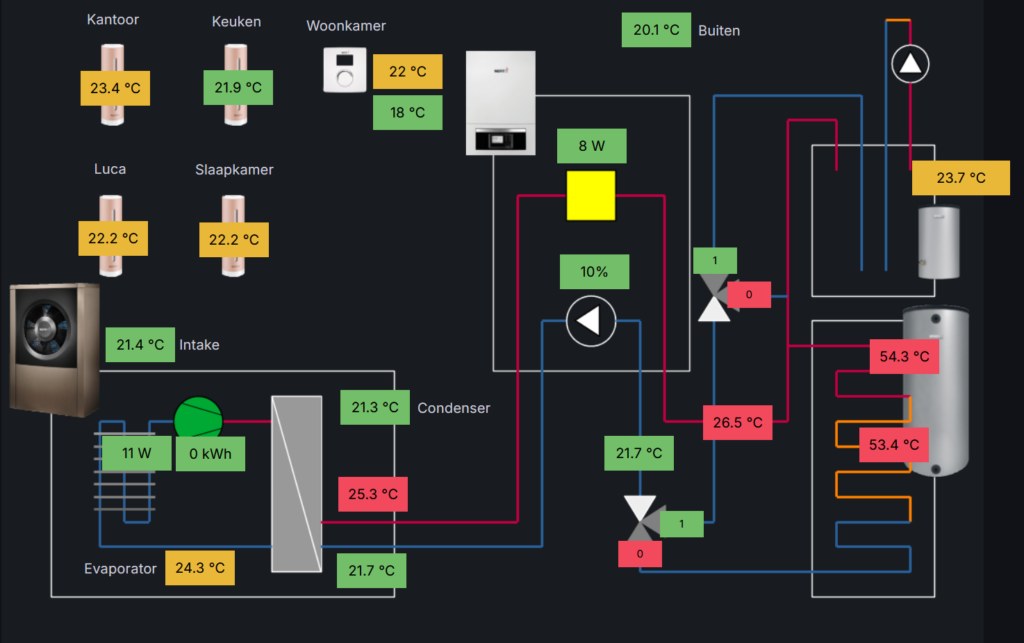

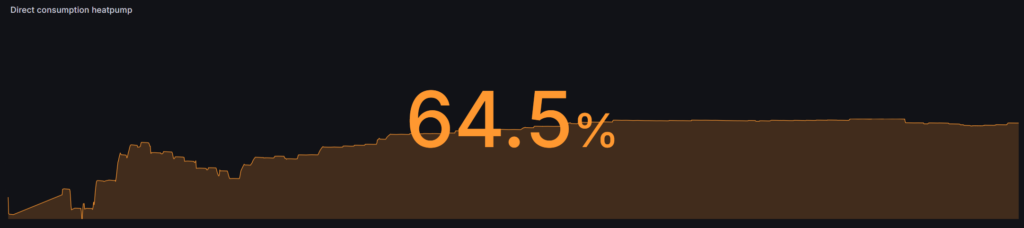
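The exact formula behind the price index isn't described here, so the following is only a minimal sketch of one way to combine the two inputs. It assumes hourly EPEX prices in EUR/kWh and an hourly solar forecast in kWh; the `HourForecast` type, the min-max normalization and the `solar_weight` and `congestion_penalty` parameters are hypothetical, and the penalty for the 16.00 to 21.00 congestion window is borrowed from the grid-load goal above. In this sketch a lower index means a better moment to consume.

```python
from dataclasses import dataclass

# Grid congestion window from the text (16.00 to 21.00), as hours of the day.
CONGESTION_HOURS = range(16, 21)


@dataclass
class HourForecast:
    hour: int         # hour of the day, 0-23
    price: float      # EPEX price for that hour (assumed EUR/kWh)
    solar_kwh: float  # expected solar production in that hour (assumed kWh)


def price_index(forecast, solar_weight=0.5, congestion_penalty=0.3):
    """Return {hour: index}; lower means a better moment to consume.

    Hypothetical formula: price and solar are min-max normalized over the
    day to a 0..1 scale, solar lowers the index, congestion hours raise it.
    """
    prices = [f.price for f in forecast]
    p_min, p_max = min(prices), max(prices)
    s_max = max(f.solar_kwh for f in forecast)
    index = {}
    for f in forecast:
        # 0 = cheapest hour of the day, 1 = most expensive hour.
        p_norm = (f.price - p_min) / (p_max - p_min) if p_max > p_min else 0.0
        # 0 = no sun, 1 = sunniest hour of the day.
        s_norm = f.solar_kwh / s_max if s_max > 0 else 0.0
        value = p_norm - solar_weight * s_norm
        if f.hour in CONGESTION_HOURS:
            value += congestion_penalty
        index[f.hour] = round(value, 3)
    return index
```

Normalizing both inputs to the same 0..1 scale means `solar_weight` expresses how much an hour of full sun is worth relative to the day's full price spread, which keeps the weight meaningful on both cheap and expensive days.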

- Heating optimization: when winter comes, I calculate the expected number of hours the heat pump needs to run to keep our home comfortable. The heat pump is then switched on during the hours with the most favorable energy price index. Additionally, when a lot of sun is expected, heating is disabled and the sun heats up our home during the morning, sometimes to a comfortable 21°C while it’s freezing outside;

- Hot water optimization: hot water generation is planned based on the current water temperature and the energy price index;

- Ventilation optimization: we have six CO2 sensors in our home, and the ventilation system is controlled based on their readings. When we are not at home the ventilation slows down, which saves energy in the ventilation system itself and reduces heat losses;

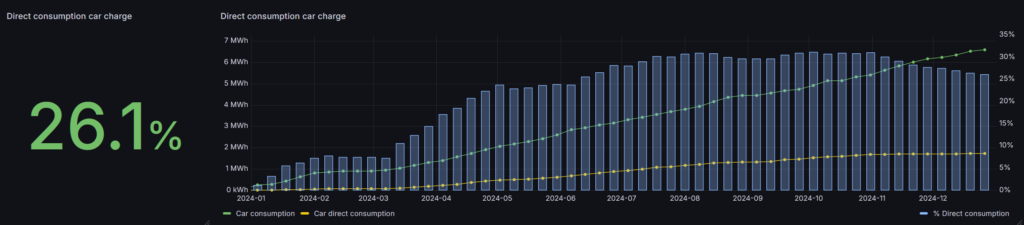

- Car charging planning: car charging is planned based on our departure time the next day, the battery’s state of charge and the energy price index;

- Appliance planning: the dishwasher, washer and dryer programs are planned based on the time of day the program is started and the energy price index;

- Security alarm system: the security alarm system is automatically armed when we leave. Camera snapshots are sent to our mobile devices when the virtual perimeter alarms are triggered, when motion detectors are triggered, or when people approach the front door;

- Light control: lights are automatically controlled based on the time of day, position of the sun (season), motion detectors, and state of the security alarm system;

- Solar screen control: solar screens are automatically controlled based on the weather forecast and the indoor temperature;

- Beer/drinks fridge control: our outdoor fridge only switches on on Fridays, Saturdays and Sundays when the temperature exceeds 20°C and sun is expected;

- Automated radio start: this used to be an annoyance. Now the radio starts automatically when the Chromecast comes online, so there are no more apps to control the radio manually. At the weekend a different radio channel is selected to mark the switch from work mode to weekend mode;

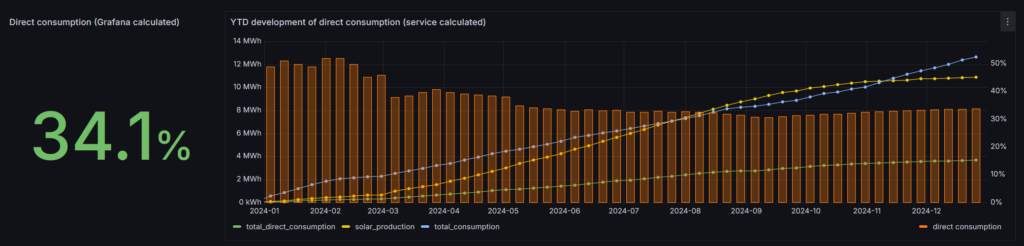

- Energy monitoring: 50 energy monitoring sensors covering everything from the consolidated building energy consumption to the freezer, fridge, lighting, car, heat pump, solar panels, TV and IT equipment;

- Solar production control: solar energy production is automatically turned off when energy prices are negative.
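For the heating optimization, once the expected number of heat-pump hours is known, choosing when to run can be as simple as taking the hours with the lowest price index. The sketch below assumes the index is a `{hour: value}` dictionary in which lower is better; the `plan_heating` name and the 06.00 to 12.00 morning window that is left to the sun on sunny days are hypothetical choices, not a description of the actual micro-service.

```python
import heapq


def plan_heating(index, hours_needed, sunny_morning_expected=False):
    """Return the hours (in time order) in which the heat pump should run.

    index: {hour: price index}, lower is assumed to be better.
    hours_needed: expected heat-pump run hours for the day, estimated elsewhere.
    sunny_morning_expected: if True, morning hours are left to solar gain.
    """
    # Hypothetical morning window in which the sun is expected to do the heating.
    morning = range(6, 12)
    candidates = {
        hour: value
        for hour, value in index.items()
        if not (sunny_morning_expected and hour in morning)
    }
    # Keep the hours with the lowest index values.
    chosen = heapq.nsmallest(hours_needed, candidates, key=candidates.get)
    return sorted(chosen)
```

The same lowest-hours selection could plausibly serve hot water planning as well, with the number of hours derived from the current water temperature instead of the heating demand.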
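For the ventilation, one simple approach is to let the room with the highest CO2 concentration drive the whole-house fan level. The 800/1000/1200 ppm thresholds, the four fan levels and the cap applied when nobody is home are assumptions for illustration; the text only says that ventilation follows the six CO2 sensors and slows down when we are away.

```python
import bisect

# Hypothetical CO2 thresholds (ppm) separating fan levels 0..3.
THRESHOLDS_PPM = [800, 1000, 1200]


def ventilation_level(co2_ppm_readings, someone_home):
    """Return a fan level from 0 (minimum) to 3 (maximum).

    The highest reading across all sensors decides the level, so one stuffy
    room is enough to raise ventilation for the whole house.
    """
    worst = max(co2_ppm_readings)
    # bisect_right counts how many thresholds the worst reading has reached.
    level = bisect.bisect_right(THRESHOLDS_PPM, worst)
    if not someone_home:
        # Assumption: when nobody is home, cap the level to save fan energy
        # and reduce heat losses.
        level = min(level, 1)
    return level
```

Using the maximum rather than the average keeps one occupied room from being masked by several empty ones.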
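Car charging adds a deadline and a bit of arithmetic. The energy still needed, (target − current state of charge) × battery capacity, divided by the charger power and rounded up, gives the number of charging hours, which can then be picked from the lowest-index hours before departure. For example, charging a 75 kWh battery from 40% to 80% needs 30 kWh, which at 11 kW rounds up to 3 hours. The function name, battery capacity, charger power and target state of charge are hypothetical inputs in this sketch.

```python
import heapq
import math


def plan_charging(index, now_hour, departure_hour, soc_pct, target_pct,
                  battery_kwh, charger_kw):
    """Return the hours (in time order) in which the car should charge.

    index: {hour: price index}, lower is better. Hours continue past midnight
    (24 = 00.00 next day) so a next-morning departure is simply a larger number.
    """
    # Energy still needed to reach the target state of charge.
    energy_kwh = max(0.0, (target_pct - soc_pct) / 100 * battery_kwh)
    # Whole charging hours needed at the given charger power.
    hours_needed = math.ceil(energy_kwh / charger_kw)
    # Only hours between now and departure are eligible.
    window = {h: v for h, v in index.items() if now_hour <= h < departure_hour}
    if hours_needed >= len(window):
        # Not enough (or just enough) time: charge in every available hour.
        return sorted(window)
    return sorted(heapq.nsmallest(hours_needed, window, key=window.get))
```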
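Appliance programs differ from heating in one respect: a dishwasher or washer program has to run as one contiguous block. Assuming an hourly index and a known program length, one way to plan it is a sliding window that starts at the hour the program was started and picks the start hour with the lowest summed index. The `best_start` name and the optional `latest_end` deadline are hypothetical additions for illustration.

```python
def best_start(index, start_hour, duration_hours, latest_end=None):
    """Return the start hour with the lowest summed price index over a
    contiguous run of `duration_hours`, or None if no window fits.

    index: {hour: price index}, lower is better.
    start_hour: hour at which the program was started.
    latest_end: optional hour by which the program must be finished.
    """
    best_hour, best_cost = None, float("inf")
    for hour in sorted(h for h in index if h >= start_hour):
        window = range(hour, hour + duration_hours)
        if latest_end is not None and window.stop > latest_end:
            break  # later start hours would finish even later
        if not all(h in index for h in window):
            continue  # forecast does not cover the whole run
        cost = sum(index[h] for h in window)
        if cost < best_cost:
            best_hour, best_cost = hour, cost
    return best_hour
```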

I still have a short “wish” list:

- Once it makes financial sense, add a battery to the setup to further reduce energy consumption from the grid;

- Keep an eye on traffic speed on the main road next to our house;

In general, I’m in an optimization phase. Most use-cases are up and running and need regular tweaking and fine-tuning. Sometimes optimization means spending 10 minutes changing an algorithm and pushing the micro-service into production; sometimes it means two weeks of (evening) work to fundamentally change the architecture of a use-case. It’s a hobby project: it keeps me off the streets and challenges my technical skills.